PodResourceProfile Reacting on a ScaledObject

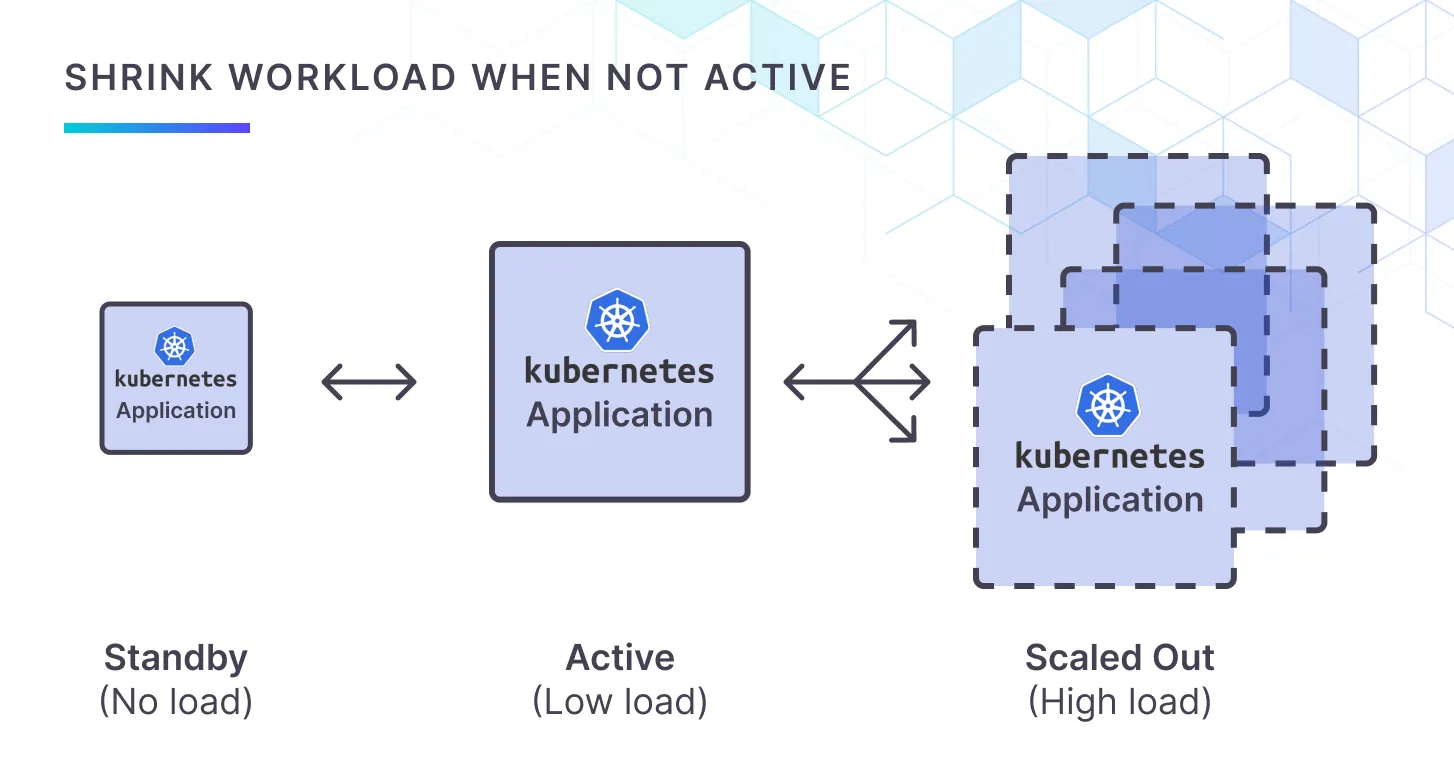

Most conversations about “scaling to zero” focus on replicas: spin every Pod down when demand drops, spin them back up later. That’s great if your app can cold‑start in milliseconds. But what if you still need a single instance alive (think health‑checks, long‑lived connections, or stubborn frameworks) yet you don’t want it hogging 250 MB of RAM while users are away?

Vertical shrinking is the missing half of the puzzle: keep the Pod running, just ask the kernel for less. With Kedify you can now do exactly that by combining two building blocks you already know:

- ScaledObject: drives horizontal scaling based on real‑time events.

- PodResourceProfile (PRP): performs in‑place CPU / memory right‑sizing whenever a trigger says so.

Prerequisites

- The `kubectl` command line utility installed and accessible.

- Connect your cluster in the Kedify Dashboard.

  - If you do not have a connected cluster, you can find more information in the installation documentation.
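Before starting, it can help to confirm that the command line tools are actually on your PATH. The snippet below is a minimal sketch: `missing_tools` is a hypothetical helper name, and the tool list (`kubectl`, plus `jq` and `watch`, which are used in Step 4) is an assumption based on the commands in this guide.

```bash
# missing_tools TOOL...
# Prints the name of every TOOL that cannot be found on PATH.
# (Hypothetical helper, not part of kubectl or Kedify.)
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Example usage:
#   missing="$(missing_tools kubectl jq watch)"
#   [ -z "$missing" ] || echo "Please install: $missing"
```

An empty result means every listed tool was found.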

Step 1: Make Sure Your Kubernetes Cluster Is Supported

```bash
# create sample workload
kubectl create deployment nginx --image=nginx

# set some initial resources
kubectl set resources deployment nginx --requests=memory=250M

# make sure the pod will have a required annotation on it
kubectl patch deployments.apps nginx --type=merge -p '{"spec":{"template": {"metadata":{"annotations": {"prp.kedify.io/reconcile": "enabled"}}}}}'

# finally, verify that resources can be modified w/o the need for pod's restart
kubectl patch po $(kubectl get po -lapp=nginx -ojsonpath="{.items[0].metadata.name}") --type=json -p \
  '[{"op":"replace","path":"/spec/containers/0/resources/requests/memory","value":"242M"}]'
```

If the last command is executed correctly, you should see a message similar to pod/nginx-6c7f8d766f-r49p9 patched.

Otherwise, make sure you are running Kubernetes version 1.33 or higher, where the In-place Resource Resize feature is enabled by default.
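One way to check the version requirement from a script is sketched below. The function names are hypothetical helpers, and the check only looks at the reported server version: it assumes the default feature gates were not changed and that `jq` is installed. Some distributions report the minor version with a suffix such as `33+`, so non-digits are stripped before comparing.

```bash
# supports_inplace_resize MAJOR MINOR
# Succeeds when MAJOR.MINOR is 1.33 or newer.
# Exit status 2 means the version could not be parsed.
# (Hypothetical helper, not part of kubectl or Kedify.)
supports_inplace_resize() (
  major="$(printf '%s' "$1" | tr -cd '0-9')"
  minor="$(printf '%s' "$2" | tr -cd '0-9')"
  [ -n "$major" ] && [ -n "$minor" ] || exit 2
  [ "$major" -gt 1 ] && exit 0
  [ "$major" -eq 1 ] && [ "$minor" -ge 33 ]
)

# check_cluster_version
# Reads the server version of the current kubectl context and reports
# whether in-place resize should be on by default.
check_cluster_version() (
  version_json="$(kubectl version -o json)" || exit 1
  major="$(printf '%s' "$version_json" | jq -r '.serverVersion.major')"
  minor="$(printf '%s' "$version_json" | jq -r '.serverVersion.minor')"
  if supports_inplace_resize "$major" "$minor"; then
    echo "Kubernetes ${major}.${minor}: in-place resize should be enabled by default"
  else
    echo "Kubernetes ${major}.${minor}: InPlacePodVerticalScaling must be enabled explicitly"
    exit 1
  fi
)
```

Calling `check_cluster_version` against a connected cluster prints one of the two messages. On older clusters the feature gate may still have been enabled manually, so the patch test above remains the more direct check.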

Another option is creating a sandbox k3d cluster, where we enable this feature:

```bash
k3d cluster create prp --no-lb \
  --k3s-arg "--disable=traefik,servicelb@server:*" \
  --k3s-arg "--kube-apiserver-arg=feature-gates=InPlacePodVerticalScaling=true@server:*"
```

Step 2: Create a ScaledObject

The following ScaledObject uses a single trigger of type cron that requests two replicas for three minutes out of every six. That means two replicas during the intervals 00-03, 06-09, 12-15, 18-21, and so on. Outside these intervals the trigger is not active, the number of replicas is set to minReplicaCount, and the .status.condition of the ScaledObject is set to Passive.
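The schedule described above can be sketched as a small shell function. This is an illustration only: `desired_replicas` is a hypothetical helper, it assumes a start of `*/6` and an end of `3-59/6` (minutes 0 to 2 of each six-minute block count as active), and it ignores the short delay before the autoscaler reacts.

```bash
# desired_replicas MINUTE
# Prints the replica count the cron trigger asks for at the given
# minute of the hour (0-59): 2 while the trigger is active, otherwise 1
# (the minReplicaCount used in this guide).
desired_replicas() (
  m=${1#0}     # drop one leading zero so "08" is not read as octal
  m=${m:-0}    # "0" becomes empty above, so restore it
  if [ $(( m % 6 )) -lt 3 ]; then
    echo 2
  else
    echo 1
  fi
)

for minute in 00 02 03 05 06 59; do
  echo "minute $minute -> $(desired_replicas "$minute") replica(s)"
done
```

Minutes 00, 02 and 06 fall inside an active window and yield 2, while 03, 05 and 59 yield 1. Because 60 is divisible by 6, the pattern continues cleanly across the hour boundary.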

This lets us demonstrate the activation and passivation changes and how different resource requests are applied based on this status. However, any ScaledObject will work here; if you want to see a real-world example, check this section.

```bash
cat <<SO | kubectl apply -f -
kind: ScaledObject
apiVersion: keda.sh/v1alpha1
metadata:
  name: nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicaCount: 1
  maxReplicaCount: 2
  advanced:
    horizontalPodAutoscalerConfig:
      behavior:
        scaleDown:
          stabilizationWindowSeconds: 1
        scaleUp:
          stabilizationWindowSeconds: 1
  triggers:
    - metadata:
        desiredReplicas: "2"
        # ┌───────────── minute (0 - 59)
        # │ ┌───────────── hour (0 - 23)
        # │ │ ┌───────────── day of the month (1 - 31)
        # │ │ │ ┌───────────── month (1 - 12)
        # │ │ │ │ ┌───────────── day of the week (0 - 6) (Sunday to Saturday)
        # │ │ │ │ │                                   OR sun, mon, tue, wed, thu, fri, sat
        # │ │ │ │ │
        # │ │ │ │ │
        # * * * * *
        start: "*/6 * * * *"
        end: "3-59/6 * * * *" # require two replicas each 6 minutes for the next 3 minutes (2 -> 1 -> 2 -> 1 ..)
        timezone: Europe/Prague
      type: cron
SO
```

Step 3: Create PRPs

Assuming you’ve installed Kedify, the following custom resources should be reconciled by the built-in reconciler. Also make sure the feature flag is enabled in the Helm chart values.

```bash
cat <<PRPS | kubectl apply -f -
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-active
spec:
  target:
    kind: scaledobject
    name: nginx
  containerName: nginx
  trigger:
    after: activated
    delay: 0s
  newResources:
    requests:
      memory: 250M
---
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-standby
spec:
  target:
    kind: scaledobject
    name: nginx
  containerName: nginx
  trigger:
    after: deactivated
    delay: 5s
  newResources:
    requests:
      memory: 30M
PRPS
```

Step 4: Observe the Resource Patches

Check the Requests

```bash
watch "kubectl get po -lapp=nginx -ojsonpath=\"{.items[*].spec.containers[?(.name=='nginx')].resources}\" | jq"
```

Every three minutes there should be a change from Active to Passive or vice versa, and the corresponding resources should be applied. The watch command should display either:

```json
{
  "requests": {
    "memory": "250M"
  }
}
{
  "requests": {
    "memory": "250M"
  }
}
```

or

```json
{
  "requests": {
    "memory": "30M"
  }
}
```

Alternatively, you can follow the k8s events:

```bash
kubectl get events --field-selector involvedObject.name=$(kubectl get po -lapp=nginx -ojsonpath="{.items[0].metadata.name}") -w
```

```
3m6s   Normal   PodResourceProfileRequestsUpdated   pod/nginx-6c7f8d766f-r49p9   Requests for container 'nginx' updated to 'memory: 250M'
9s     Normal   PodResourceProfileRequestsUpdated   pod/nginx-6c7f8d766f-r49p9   Requests for container 'nginx' updated to 'memory: 30M'
```
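Since the point of in-place resizing is that the Pod keeps running, it may also be worth confirming that the containers were not restarted while the requests changed. The sketch below reads the restart counters from the Pod status. The helper names are hypothetical, and a zero count is only a meaningful signal if the Pods have not restarted for unrelated reasons; otherwise compare the counters before and after a resize.

```bash
# restart_counts LABEL_SELECTOR
# Prints the restart count of every container in the matching Pods,
# separated by spaces.
restart_counts() {
  kubectl get po -l "$1" \
    -o jsonpath='{.items[*].status.containerStatuses[*].restartCount}'
}

# assert_no_restarts LABEL_SELECTOR
# Succeeds only if at least one counter was returned and all are 0.
# Exit status 1 means a restart was seen, 2 means no data was returned.
# (Hypothetical helper, not part of kubectl or Kedify.)
assert_no_restarts() (
  counts="$(restart_counts "$1")" || exit 2
  [ -n "$counts" ] || exit 2
  for c in $counts; do
    [ "$c" -eq 0 ] || exit 1
  done
  exit 0
)

# Example usage:
#   assert_no_restarts app=nginx && echo "resized without restarts"
```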