Pod Resource Profiles

Pod Resource Profiles describe a future, in-place update of a pod's resources (CPU, memory) that does not require a pod restart. PRPs do not change the number of replicas of a workload. Instead, they work at the pod level by adjusting the resources of a container running in a pod, so they function as a vertical scaler.

In-place Updates

In-place updates allow a container's resources to be adjusted without restarting the container or the pod. This feature must be enabled on the Kubernetes cluster. Otherwise, the patch operation fails with an error. For more details, consult the documentation for the InPlacePodVerticalScaling feature gate.
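On a local test cluster, one way to meet this requirement is a kind cluster configuration that turns the gate on for all Kubernetes components. The sketch below assumes you use kind rather than the k3d setup from the Quick Start, and the single control-plane node layout is only illustrative. On managed clusters, the provider controls feature gates, and recent Kubernetes releases may already enable this one by default.

```yaml
# Sketch: kind cluster config that enables in-place pod resizing.
# kind applies top-level featureGates to all Kubernetes components
# (API server, kubelet, scheduler, controller manager).
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
featureGates:
  InPlacePodVerticalScaling: true
nodes:
  - role: control-plane
```

The file would then be passed to kind create cluster --config <file>.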

Pod Resource Profile (PRP) CRD

The Kedify agent contains a controller that reconciles PRPs (Pod Resource Profiles) and manages pods annotated with the following:

```yaml
prp.kedify.io/reconcile: "true"
```

Based on the rules specified in the PRP custom resource, the controller either acts immediately or schedules an event for a later time.
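The controller looks for the annotation on pods, so it is usually set in the pod template of the owning workload. That way every new pod carries it. Below is a minimal sketch for a Deployment. The workload name, labels, image, and initial request are illustrative. Kubernetes requires annotation values to be strings, so the value is quoted.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
      annotations:
        # Opts the pods of this workload in to PRP reconciliation.
        prp.kedify.io/reconcile: "true"
    spec:
      containers:
        - name: nginx
          image: nginx
          resources:
            requests:
              memory: 40Mi
```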

Example PRP:

```yaml
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: podresourceprofile-sample
spec:
  selector: # use either selector or spec.target
    matchLabels:
      app: nginx
  containerName: nginx # required - container name to update
  paused: false # optional, defaults to false
  priority: 0 # optional, defaults to 0
  trigger: # allowed values: (de)activated, container{Ready,Started}, pod{Ready,Scheduled,Running}
    after: containerReady # optional, defaults to containerReady
    delay: 30s # required, examples: 20s, 1m, 90s, 2m30s, 2h
  newResources: # required - new requests and/or limits
    requests:
      memory: 50M
      cpu: 200m
```

This PodResourceProfile updates the container named nginx, in any pod matching the selector (app=nginx), 30 seconds after the container becomes ready. The timer starts once the readiness probe passes. After that, the memory request is set to 50 MB (50M) and the CPU request to 200 millicores.

The PRP_ENABLED environment variable enables or disables the controller on the Kedify Agent. By default, it is enabled. The PRP_REQUIRES_ANNOTATED_PODS environment variable turns off the requirement for annotated pods. Turning it off may have performance implications, because the controller then processes events for all pods rather than only annotated ones. The controller still filters out pod events that do not change container or pod readiness status and events for pods that no PRP resource references.
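Both variables are set on the Kedify Agent container. The excerpt below sketches how this could look in the agent's Deployment. Two details are assumptions: the container name kedify-agent and the use of "true"/"false" as values. Check how your installation exposes these settings before applying anything like it.

```yaml
# Hypothetical excerpt of the Kedify Agent Deployment manifest.
# The container name and the value format are assumptions.
spec:
  template:
    spec:
      containers:
        - name: kedify-agent
          env:
            - name: PRP_ENABLED # the PRP controller is enabled by default
              value: "true"
            - name: PRP_REQUIRES_ANNOTATED_PODS # also reconcile non-annotated pods
              value: "false"
```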

Addressing Pods

The example above uses a common label selector. It has the same schema as a Deployment's selector, so anything that can appear under deployment.spec.selector can be used here as well. A selector also makes the PodResourceProfile feature available for Jobs and CronJobs.
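For instance, a PRP can match a Job's pods through a label set in the Job's pod template. The sketch below lowers the CPU request of a long-running batch container five minutes after it becomes ready. The names batch-worker and worker, the image, the command, and the resource values are all hypothetical. The pod template also carries the reconcile annotation, which stays required unless PRP_REQUIRES_ANNOTATED_PODS has been turned off.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: batch-worker
spec:
  template:
    metadata:
      labels:
        app: batch-worker # matched by the PRP selector below
      annotations:
        prp.kedify.io/reconcile: "true"
    spec:
      restartPolicy: Never
      containers:
        - name: worker
          image: busybox
          command: ["sh", "-c", "sleep 3600"]
          resources:
            requests:
              cpu: "1" # generous request for the initial phase
---
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: batch-worker-steady
spec:
  selector:
    matchLabels:
      app: batch-worker
  containerName: worker
  trigger:
    after: containerReady # no readiness probe: ready once fully started
    delay: 5m
  newResources:
    requests:
      cpu: 250m
```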

Another way to target pods is the target field. For example:

```yaml
target:
  kind: deployment
  name: nginx
```

The selector and target fields are mutually exclusive. The allowed kinds for the .spec.target field are:

- deployment
- statefulset
- daemonset
- scaledobject

The workload is assumed to be in the same namespace as the PRP resource.
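Combining the pieces, the sample profile from above could address the nginx Deployment through target instead of a label selector. This sketch reuses the names from the earlier example, and the profile name is hypothetical. The PRP must be created in the Deployment's namespace.

```yaml
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-by-target
spec:
  target: # mutually exclusive with spec.selector
    kind: deployment
    name: nginx # Deployment in the same namespace as this PRP
  containerName: nginx
  trigger:
    after: containerReady
    delay: 30s
  newResources:
    requests:
      memory: 50M
      cpu: 200m
```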

Triggers

Allowed values include:

- containerReady (default): specifies whether the container is currently passing its readiness check. The value changes as readiness probes continue executing. If no readiness probes are specified, it becomes true once the container has fully started.
  - field: pod.status.containerStatuses.ready
  - time: pod.status.containerStatuses.state.running.startedAt
- containerStarted: indicates whether the container has completed its postStart lifecycle hook and passed its startup probe. It is initialized as false and becomes true after the startupProbe is considered successful. It resets to false if the container is restarted or if the kubelet temporarily loses state; in both cases, startup probes run again. It is always true if no startupProbe is defined, the container is running, and it has passed the postStart lifecycle hook. A null value must be treated the same as false.
  - field: pod.status.containerStatuses.started
  - time: pod.status.containerStatuses.state.running.startedAt
- podReady: indicates that the pod can serve requests and should be added to the load-balancing pools of all matching Services.
  - field: pod.status.conditions[?(.type=='Ready')].status
  - time: pod.status.conditions[?(.type=='Ready')].lastTransitionTime
- podScheduled: represents the status of the scheduling process for this pod.
  - field: pod.status.conditions[?(.type=='PodScheduled')].status
  - time: pod.status.conditions[?(.type=='PodScheduled')].lastTransitionTime
- podRunning: indicates that the pod has been bound to a node and all of its containers have been created. At least one container is still running or is in the process of starting or restarting.
  - field: pod.status.phase
  - time: pod.status.startTime
- activated, deactivated: allowed only when .spec.target.kind is set to scaledobject. The trigger fires based on the activation status of the associated ScaledObject.
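The profile below shows a non-default trigger. It reacts to containerStarted, which becomes true once a container's startup probe succeeds. This is a sketch for the startup-heavy applications described under Use-cases. The profile name and CPU value are hypothetical, and the pod is assumed to start with a larger CPU request defined in its workload.

```yaml
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-after-startup
spec:
  selector:
    matchLabels:
      app: nginx
  containerName: nginx
  trigger:
    # True once the startup probe succeeds, or once the container is
    # running and past postStart if no startupProbe is defined.
    after: containerStarted
    delay: 1m
  newResources:
    requests:
      cpu: 100m # smaller steady-state CPU request
```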

ScaledObject & PodResourceProfile

The allowed values for .spec.target.kind also include scaledobject. In this case, the PodResourceProfile's trigger must be set to either activated or deactivated.

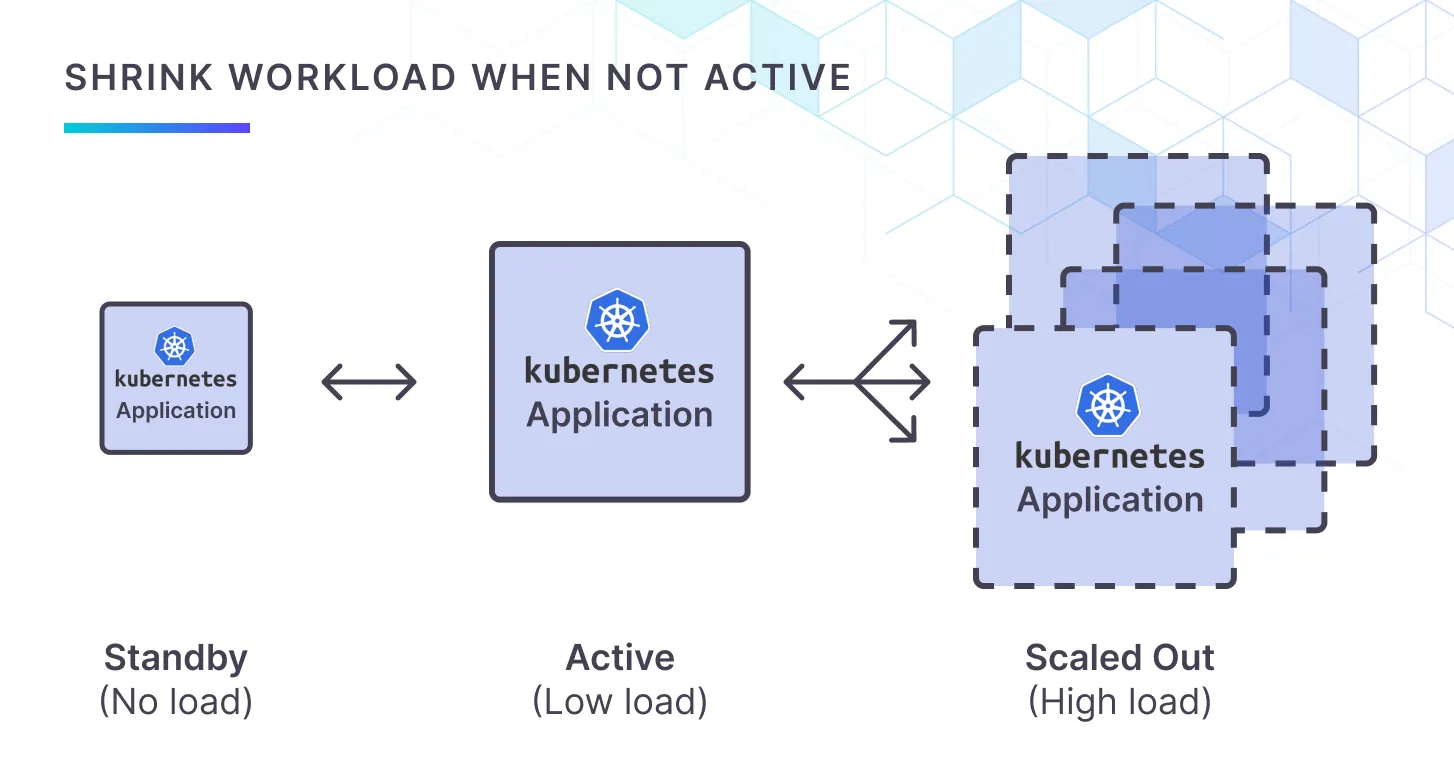

This way, we can lower the requested resources when the workload is idle. The feature resembles scaling to zero replicas. However, in this setup we shrink the pod size (in terms of resources) rather than the number of replicas.

Quick recap: When does a ScaledObject change state?

- deactivated – event rate < targetValue for the configured cooldown period.

- activated – event rate ≥ targetValue (10 req/s in the example below).

These state flips are now first‑class PRP triggers.

Example:

```yaml
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-active
spec:
  target:
    kind: scaledobject
    name: nginx
  containerName: nginx
  trigger:
    after: activated
    delay: 0s
  newResources:
    requests:
      memory: 250M
---
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-standby
spec:
  target:
    kind: scaledobject
    name: nginx
  containerName: nginx
  trigger:
    after: deactivated
    delay: 5s
  newResources:
    requests:
      memory: 30M
---
kind: ScaledObject
apiVersion: keda.sh/v1alpha1
metadata:
  name: nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicaCount: 1
  maxReplicaCount: 8
  triggers:
    - type: kedify-http
      metadata:
        hosts: www.my-app.com
        service: http-demo-service
        port: "8080"
        scalingMetric: requestRate
        targetValue: "10"
```

The nginx-standby PodResourceProfile shrinks the memory request of the last remaining replica to 30 megabytes. One replica remains because minReplicaCount is set to 1. The new resources are applied 5 seconds after the ScaledObject deactivates. Keeping one small replica instead of scaling to zero can help avoid the cold-start delays of the scale-to-zero use case when the application is not optimized for fast starts.

To request more resources for the workload when traffic increases, we can use the nginx-active PRP. The nginx ScaledObject first needs to become active (at least 10 requests per second). Its memory request is then set to 250 megabytes.

This setup allocates resources in the Kubernetes cluster more efficiently. When the nginx deployment is not actively used, it frees precious allocatable memory for other workloads. Combined with Karpenter, this can translate into lower cloud bills.

Ready to Try?

- Upgrade to the latest Kedify Agent (>= v0.2.16 for PRP‑ScaledObject support).

- Enable the InPlacePodVerticalScaling feature‑gate on your cluster if it’s not already on.

- Create your first “‑standby / ‑active” pair and watch your requested GBs plummet.

Have questions or want hands‑on help benchmarking savings? Ping us on Slack or book a 15‑minute chat. We love swapping scaling war stories.

Use-cases

Pod Resource Profiles are useful in scenarios where workloads exhibit predictable resource consumption behavior. Certain application frameworks require a significant amount of memory or CPU during startup for initialization but then need less during steady operation.

Another example could be a job that runs to completion but requires different computational resources at different stages. Instead of allocating the maximum resources for all phases, the PRP can match the workload’s actual utilization profile, allowing for more efficient bin packing by the Kubernetes scheduler.

The current design allows multiple PRP resources to target the same pods. In such cases, matching PRPs are sorted first by priority (.spec.priority) and then by delay. The PRP with the smallest unapplied delay is selected over one with a larger delay. If multiple PRPs still tie, they are sorted alphabetically, and the "smaller" one wins. This lets you set up multiple PRPs for the same workload, changing its resource allocation several times during the pod's lifecycle.
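Here is a sketch of such a staged setup. The two profiles target the same pods with equal priority. Under the ordering rules above, nginx-warm (2m) has the smaller unapplied delay and should be selected first. nginx-steady (10m) should then apply once its delay has elapsed. The profile names and values are hypothetical, and the pods are assumed to start with a larger memory request defined in the workload.

```yaml
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-warm
spec:
  selector:
    matchLabels:
      app: nginx
  containerName: nginx
  priority: 0
  trigger:
    after: containerReady
    delay: 2m # smaller delay, selected first
  newResources:
    requests:
      memory: 200M
---
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx-steady
spec:
  selector:
    matchLabels:
      app: nginx
  containerName: nginx
  priority: 0 # same priority, so the delay decides the order
  trigger:
    after: containerReady
    delay: 10m # applied after nginx-warm
  newResources:
    requests:
      memory: 100M
```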

Quick Start

```shell
# Prepare a cluster with the feature enabled
# (the kubelet needs the gate too, to actually resize running containers)
k3d cluster create dyn-resources --no-lb --k3s-arg "--disable=traefik,servicelb@server:*" \
  --k3s-arg "--kube-apiserver-arg=feature-gates=InPlacePodVerticalScaling=true@server:*" \
  --k3s-arg "--kubelet-arg=feature-gates=InPlacePodVerticalScaling=true@server:*"
# Install Kedify agent...
```

Create a sample nginx deployment with one pod. After the steps below, its container will request 45Mi of memory.

```shell
# Create a deployment
kubectl create deployment nginx --image=nginx

# Wait for it to become ready
kubectl rollout status deploy/nginx

# Set resource requests to observe changes
kubectl set resources deployment nginx --requests=memory=40Mi

# Add the required annotation to the pods
kubectl patch deployments.apps nginx --type=merge -p \
  '{"spec":{"template":{"metadata":{"annotations":{"prp.kedify.io/reconcile":"enabled"}}}}}'

# Wait for the rollout triggered by the changes above to finish
kubectl rollout status deploy/nginx

# Verify that in-place patches on resources work
kubectl patch po $(kubectl get po -lapp=nginx -ojsonpath="{.items[0].metadata.name}") --type=json \
  -p '[{"op":"replace","path":"/spec/containers/0/resources/requests/memory","value":"45Mi"}]'
# If the request fails, ensure the Kubernetes cluster has the InPlacePodVerticalScaling feature on
```

Now, create a PodResourceProfile resource. After 20 seconds, the memory request will be changed to 30M.

```shell
# Create a PRP resource for the controller
cat <<PRP | kubectl apply -f -
apiVersion: keda.kedify.io/v1alpha1
kind: PodResourceProfile
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  containerName: nginx
  trigger:
    delay: 20s
  newResources:
    requests:
      memory: 30M
PRP
```

Finally, check that everything works as expected.

```shell
# Check the PRP resource
kubectl get prp -owide

# After some time, inspect the nginx container's resources
kubectl get po -lapp=nginx -ojsonpath='{.items[*].spec.containers[?(@.name=="nginx")].resources}' | jq
```